Most people have probably heard the terms sitemap and robots.txt used in connection with a particular platform or website. Surprisingly, not many business owners are familiar with the sitemap.xml file and robots.txt.

Their apparent complexity is probably the main reason why retailers and business owners consider them a big deal. Yet these files often have a vital influence on business building and customer engagement.

In this article, we’ll go over the key differences between robots.txt and sitemap.xml and why each one matters. Before we dive in, we first need to cover a few points that will help you understand how these files work.

Crawling and Indexing

Search engines like Google use internet bots, also called web crawlers or spiders, to browse the World Wide Web. These crawler bots go through all publicly available web pages, follow the links on them, and bring the data back to their parent servers (e.g. Google’s), where the pages are indexed. The indexing procedure of a search engine is much the same as that of a book, where keywords and phrases are listed together with pointers so that related material is easy to find. Similarly, the World Wide Web is an ever-growing library of web pages, and the crawlers go through these pages, noting keywords and other key signals, to build an index.

The Google search index contains billions of web pages, amounting to around 100 million gigabytes of data, which helps us get the most relevant search results.
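The book-index comparison above can be sketched in a few lines of Python. This is only a toy illustration of the idea of mapping keywords to pointers, not how Google actually builds its index; the two pages and their text are made up for the example.

```python
from collections import defaultdict
import re

# Hypothetical crawled pages: URL -> page text (made-up data for illustration).
pages = {
    "https://example.com/": "Fresh coffee beans and brewing guides",
    "https://example.com/guides": "Brewing guides for espresso and filter coffee",
}


def build_index(pages):
    """Map each lowercase keyword to the sorted list of URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z]+", text.lower()):
            index[word].add(url)
    return {word: sorted(urls) for word, urls in index.items()}


index = build_index(pages)
print(index["coffee"])    # both pages mention "coffee"
print(index["espresso"])  # only the guides page mentions "espresso"
```

Looking up a keyword then returns the pages that mention it, much like turning to the index at the back of a book.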

Sitemaps and Robots

What Is an XML Sitemap?

To make this whole indexing process more efficient, website owners can provide Google with a file that describes the pages, videos, and other files on the site, known as a sitemap. A sitemap tells the search engine which files on the website are most important, and it can carry more detailed information such as when a page was last updated or whether alternate language versions of the page exist. It can also provide specific information about content such as videos, images, or news. For example:

- A sitemap video entry can specify the video running time, category, and age-appropriateness rating.

- A sitemap image entry can include the image subject matter, type, and license.

- A sitemap news entry can have the article title and publication date.
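To make the idea of a sitemap file more concrete, here is a minimal sketch that builds one with Python's standard library. It covers only the basic page entries (address and last-updated date), not the video, image, or news extensions. The URLs reuse the placeholder domain from this article and the dates are invented; the `build_sitemap` helper is a hypothetical name, not part of any tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages: (URL, date last modified). Made up for illustration.
entries = [
    ("https://www.xyz.com/", "2024-01-15"),
    ("https://www.xyz.com/products", "2024-02-01"),
]


def build_sitemap(entries):
    """Return sitemap XML as a string with one <url> element per entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # page address
        ET.SubElement(url, "lastmod").text = lastmod  # when the page last changed
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap(entries)
print(sitemap_xml)
```

In practice most content management systems and SEO plugins generate this file automatically, so a script like this is mainly useful for understanding what the file contains.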

Generally, a sitemap is needed for large and complicated websites with rich media content (videos, images, news, etc.), or for websites whose pages are isolated or that have only a few external links pointing to them. On the other hand, crawlers can easily get through smaller websites with less media content and a proper system of internal links and navigation between pages.

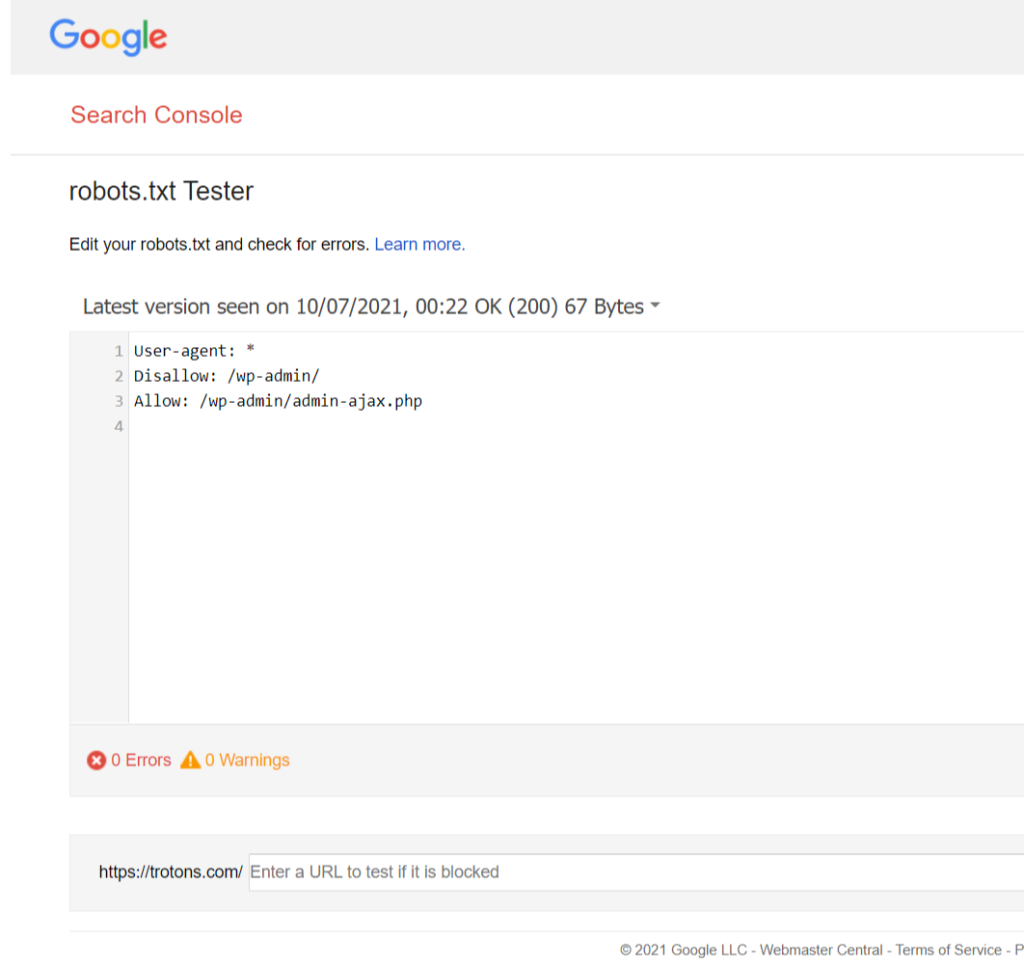

What Is a Robots.txt File?

Website owners can direct crawlers, or restrict them from browsing specific content or pages of their website, using a robots.txt file. It is generally used to avoid overloading a website with requests; it is not a mechanism for keeping web pages out of Google.

These instructions cannot enforce a crawler’s behavior, however; it is up to the crawler to obey them. They are also not honored by every search engine: Google’s crawlers follow most of them, but crawlers of other search engines might not. Hence, it’s better to use other blocking methods, such as password-protecting private files on your server, to keep information secure from web crawlers.

What is included in a robots.txt file?

A complete robots.txt file can be as short as two lines, a user-agent line and a directive, although many lines of user-agents and directives may be written to give specific instructions to each bot. If you would like your robots file to permit all user agents to crawl all pages, the file needs only a "User-agent: *" line followed by an empty "Disallow:" line.
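As a rough illustration of how a well-behaved crawler might read such a file, the sketch below uses Python's standard `urllib.robotparser` module. The first file is the allow-all case described above; the second file, with its `/private/` path, is a made-up example of a restriction, and `parse_robots` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def parse_robots(text):
    """Parse robots.txt content supplied as a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


# Permits every user agent to crawl every page.
allow_all = parse_robots("User-agent: *\nDisallow:\n")

# Hypothetical rule: keep all bots out of /private/ (path made up for illustration).
restricted = parse_robots("User-agent: *\nDisallow: /private/\n")

print(allow_all.can_fetch("Googlebot", "https://www.xyz.com/any-page"))
print(restricted.can_fetch("Googlebot", "https://www.xyz.com/private/report"))
print(restricted.can_fetch("Googlebot", "https://www.xyz.com/blog"))
```

Note that the check happens on the crawler's side, which is exactly why robots.txt only works for bots that choose to obey it.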

The robots file for any website can be found by adding /robots.txt to the end of its domain. For example, for a website called XYZ, you would type www.xyz.com/robots.txt

Note: Each domain and sub-domain has its own robots file, so the robots.txt on the main domain cannot control how a sub-domain is crawled.
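The note above can be shown with a small sketch that works out where the robots file lives for any given page. The `robots_url` helper is a hypothetical name, and `blog.xyz.com` is an assumed sub-domain used only for illustration.

```python
from urllib.parse import urlsplit


def robots_url(page_url):
    """Return the robots.txt address for the host that serves page_url."""
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


main_site = robots_url("https://www.xyz.com/products/item?id=1")
sub_domain = robots_url("https://blog.xyz.com/post")

print(main_site)   # https://www.xyz.com/robots.txt
print(sub_domain)  # https://blog.xyz.com/robots.txt
```

The two results are different files, so rules written for the main site do not carry over to the sub-domain.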

Which websites need a site map?

Google states that sitemaps are useful for websites that meet the following criteria:

- Big websites

- Websites with lots of isolated or poorly linked content

- New websites

- Websites with rich media content

Are There Any Risks with Having a Sitemap?

Sitemaps are a rare and welcome thing in SEO. Having a sitemap carries no risk for website owners, only benefits.

However, Google has made the point that it cannot guarantee that its bots will even read the sitemap.

In summary, Google has confirmed that you won’t be penalized for having a sitemap and that it can be helpful for your website. With that information, you can see why every website that wants to rank on Google needs one. Whenever I start working with a client on an SEO campaign, I always recommend a sitemap as part of the process.

That’s it for this article. Let us know which topic we should cover in our next article.